Why Google’s ‘Total Stack’ Strategy Defines the AI End-Game

In the opening weeks of 2026, the global conversation surrounding artificial intelligence has undergone a fundamental shift. The industry has moved beyond the "Intelligence Era", characterised by the frantic race for higher benchmarks and linguistic fluency, and entered the "Industrial Era." In this new phase, the victor is no longer determined by who can build the smartest model, but by who can deliver that intelligence at the lowest marginal cost.

While the cultural zeitgeist remains focused on the rivalry between OpenAI’s GPT-5.3 and Anthropic’s Claude 4.6, a quieter, more structural victory is unfolding. By owning the entire AI value chain, from subsea fibre-optic cables and custom silicon to twenty years of proprietary multimodal data, Google has engineered a "vertical trap." This strategy has effectively turned its rivals into high-paying tenants, while Google operates as the ultimate landlord of the AI economy.

The Silicon Moat: Decoupling from the Nvidia Tax

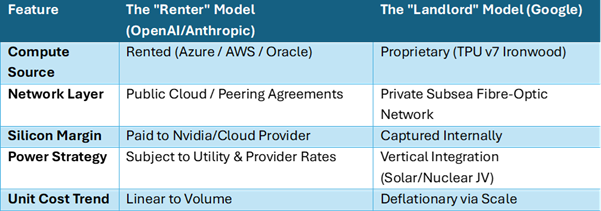

For the past three years, the AI race has been a "GPU war," with every major player competing for the same limited supply of Nvidia Blackwell and Rubin chips. However, in 2026, Google has successfully decoupled itself from this bottleneck. The general availability of the TPU v7 (Ironwood) marks a turning point in compute sovereignty.

Unlike general-purpose GPUs, Ironwood is an ASIC (Application-Specific Integrated Circuit) designed for a single purpose: tensor computation at massive scale. According to current 2026 performance data, Ironwood delivers a 4x performance-per-dollar advantage over standard cloud-rented GPUs for both training and high-volume inference. By building its own chips, Google has eliminated the "Nvidia Tax": the 60–75% profit margin on Nvidia hardware that rivals like OpenAI must indirectly pay when they rent compute via Microsoft Azure.
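To make the "Nvidia Tax" figure concrete, the short sketch below converts a supplier's margin into the multiple a buyer pays over the supplier's own cost. It assumes the 60–75% figure is a gross margin measured against the selling price, which the article does not specify; the function name `markup_from_margin` is illustrative only.

```python
def markup_from_margin(margin: float) -> float:
    """Return the price-to-cost multiple implied by a gross margin.

    Assumes margin = (price - cost) / price, so price = cost / (1 - margin).
    """
    if not 0 <= margin < 1:
        raise ValueError("margin must be in the range [0, 1)")
    return 1.0 / (1.0 - margin)


# The two ends of the margin range quoted in the article.
for margin in (0.60, 0.75):
    multiple = markup_from_margin(margin)
    print(f"{margin:.0%} margin: buyer pays about {multiple:.2f}x the supplier's cost")
```

Under that assumption, a 60–75% margin means the buyer pays roughly 2.5 to 4 times what the hardware costs its maker. This covers only the chip supplier's share of the bill, so it is a rough cross-check on the 4x performance-per-dollar claim rather than a derivation of it.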

Table 1: The Infrastructure Divide (2026 Estimates)

The Data Fortress: YouTube and the Multimodal Edge

As we enter 2026, the "flat web", the text-based data from Reddit, Wikipedia, and news archives, has been largely exhausted. AI developers are facing a quality wall where additional text data no longer yields significant reasoning gains. This is where Google’s second structural advantage becomes insurmountable: YouTube.

For over 20 years, Google has curated the world’s largest archive of high-resolution video, audio, and synchronised metadata. While rivals are embroiled in copyright litigation over "web scraping," Google sits on billions of hours of proprietary content that provides the world’s best training ground for multimodal reasoning. Models like Gemini 3 Deep Think are not just learning from what humans wrote, but from how they move, speak, and interact in the real world. This data isn't just a moat; it is a fortress that cannot be replicated, regardless of how much capital a startup raises.

The Economics of Attrition: The Free Tier Trap

The most brutal reality of the 2026 landscape is the divergence in unit economics. Both OpenAI and Google offer massive "free tiers" to capture user share. However, for a model-only company, every free prompt is a liability, a direct cost paid to their cloud provider.

For Google, a free Gemini prompt is served at marginal cost: it runs on hardware Google owns, is powered by energy Google often generates, and is delivered over fibre Google laid. This allows Google to treat AI as a "loss leader," subsidised by its $300 billion advertising engine.

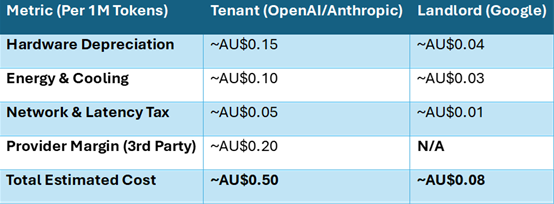

Table 2: Estimated Unit Economics of Inference (2026)

Note: Estimates reflect blended rates for mid-tier reasoning models like Gemini 3 Flash and GPT-5-mini.

This 6x cost advantage means that Google can maintain a higher quality of service for free users indefinitely, while rivals are forced to tighten usage caps or move features behind a paywall to manage their burn rate. In 2025, OpenAI’s market share sat at a dominant 87%. By early 2026, that share has eroded to roughly 60%, as Google’s Gemini has quadrupled its footprint to 21% by leveraging its distribution and lower cost-of-service.
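The arithmetic behind these figures can be checked with a brief sketch. The share numbers and the 6x ratio come from the text above; the prompt volume and per-prompt cost are hypothetical placeholders chosen only to show how the ratio scales, not estimates from any source.

```python
# Figures taken from the article.
OPENAI_SHARE_2025 = 87.0   # percent
OPENAI_SHARE_2026 = 60.0   # percent
GEMINI_SHARE_2026 = 21.0   # percent, after "quadrupling"
GROWTH_MULTIPLE = 4.0
COST_ADVANTAGE = 6.0       # rented-compute cost / owned-compute cost

# Hypothetical inputs, for illustration only.
FREE_PROMPTS_PER_DAY = 1_000_000_000
RENTED_COST_PER_PROMPT = 0.006  # dollars, assumed


def implied_prior_share(current_share: float, growth_multiple: float) -> float:
    """Share before growth, given the current share and the growth multiple."""
    return current_share / growth_multiple


def daily_cost(prompts_per_day: float, cost_per_prompt: float) -> float:
    """Total daily cost of serving free prompts."""
    return prompts_per_day * cost_per_prompt


gemini_prior_share = implied_prior_share(GEMINI_SHARE_2026, GROWTH_MULTIPLE)
openai_points_lost = OPENAI_SHARE_2025 - OPENAI_SHARE_2026

rented_daily = daily_cost(FREE_PROMPTS_PER_DAY, RENTED_COST_PER_PROMPT)
owned_daily = daily_cost(FREE_PROMPTS_PER_DAY, RENTED_COST_PER_PROMPT / COST_ADVANTAGE)

print(f"Implied earlier Gemini share: {gemini_prior_share:.2f}%")
print(f"OpenAI share lost: {openai_points_lost:.0f} percentage points")
print(f"Daily free-tier cost, rented compute: ${rented_daily:,.0f}")
print(f"Daily free-tier cost, owned compute:  ${owned_daily:,.0f}")
```

A quadrupling to 21% implies Gemini started near 5%, and OpenAI's decline from 87% to 60% is a loss of 27 percentage points. Whatever the true per-prompt cost, a 6x ratio means the owner of the stack pays about one sixth of the renter's bill at the same volume.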

Distribution: The Operating System as a Gatekeeper

The final layer of the stack is the interface. For an independent AI company, the user must make a conscious decision to "go" to its platform. Google, however, has embedded Gemini at the operating-system level of three billion Android devices and within the workflow of Google Workspace.

In 2026, AI is no longer a destination; it is an invisible layer. When a user asks their phone to "organise my week," they are not choosing an AI; they are using a feature of their operating system. By controlling the OS, Google ensures that its AI is the first, and often the only, model a user interacts with. This frictionless acquisition is a luxury that "app-based" AI competitors simply cannot afford.

Conclusion: The Strategic Realignment

For high-level professionals and decision-makers, the takeaway is clear: the AI race is no longer an "intelligence competition." If it were, the agility and research prowess of OpenAI or Anthropic might still carry the day. Instead, it has become a competition of industrial efficiency and vertical integration.

Google has spent two decades building the plumbing of the internet. By placing its AI on top of that existing architecture, it has created a cost structure that its competitors cannot match without becoming cloud providers themselves. While OpenAI may still hold the "crown" as the most innovative chef, Google owns the farm, the transport trucks, the stove, and the building where the restaurant is located.

In the long run, the landlord almost always wins the race.
